Through the course of this article, you'll get to know Docker: what it is, why we need it, what came before it, and how its architecture works.

It covers why Docker was needed, what containers are, everything that comes under the Docker Engine, what images are, and more.

Before Docker

First, one should know what servers are. To give a basic insight, servers work in a paired architecture known as the client-server model. A server's role in this model is to store data, send it to the client, and receive it from the client.

Its literal meaning is to serve: it answers the requests being made!

In terms of the web, the server hosts the website, and the client is the one consuming the resources the website provides.

Big MNCs or corporations run a collection of servers so that the load can be distributed evenly. Such a collection of servers in a large organization is known as a data centre.

So, earlier, one application used to run on one server. If the load increased, another server was bought, but this approach had flaws:

Back then, there was no well-known way to run the same application across different servers.

Not environment friendly (more hardware!)

Cost-inefficient

What to do now?

That's where virtual machines came in!

Virtual Machine

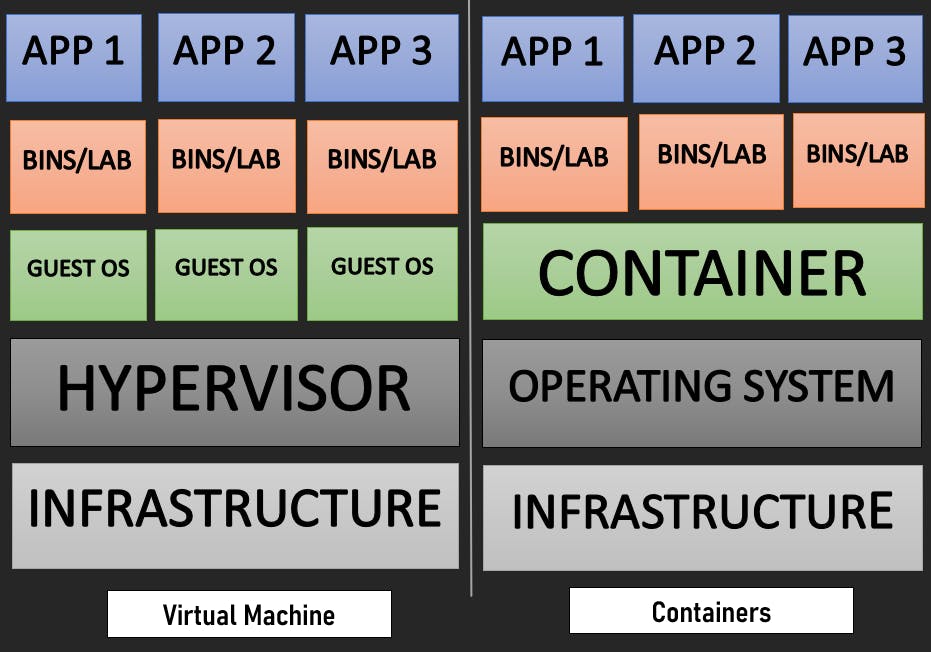

VMware tackled this problem by bringing virtual machines to ordinary servers. In simple words, a virtual machine is an emulation of a complete computer, which allows multiple applications to run in isolation on one single server. We'll see a pictorial representation of what's inside a virtual machine below.

But this too fell short for one major reason: every virtual machine requires its own operating system to work.

Think of it as working on macOS while also running Linux inside a virtual machine on the same laptop. (This is different from dual booting, where only one OS runs at a time.) It is safe and isolated, but the guest OS takes up a large chunk of disk space and memory, and that hinders the performance of your device.

What is Docker trying to solve?

The dependency on a single platform for running an application.

The time consumed in migrating code from one machine to another.

The hassle of setting up a project on our local system, for example to contribute to open source.

OK, these are some of the problems Docker is trying to solve, but how does it do that?

The answer is CONTAINERS.

Containers

In simple words, a container packages the code together with everything it needs, so that the application runs smoothly. Docker is the "container engine", i.e. the tool that lets a container do its job. The picture will become clearer once the concept of an image is introduced, so hang on till then if the exact meaning of a container isn't clear yet.

Let me show you a pictorial representation of the package:

In the above picture we can see where a container sits and what it does, compared with how a virtual machine works.

Diving into Docker as a tool

The concept of containers already existed in the industry. Companies like Google used a somewhat similar version of it, but it was Docker that made it popular.

Google also contributed container-related technology (control groups, or cgroups) to the Linux kernel.

Now let's look at what Docker Desktop has to offer:

Docker Engine

Docker CLI

Docker Compose

Docker Content Trust

Kubernetes

CLI stands for 'command-line interface'. This is where you interact with your computer by typing commands (Command Prompt on Windows, for example).

The Big 3

Runtime

This allows us to start and stop containers.

runc: a low-level runtime that works with the OS to create and run containers.

containerd: a higher-level runtime that manages runc and also handles the container's interaction with the network.

Engine

We use the engine to interact with Docker, and the Docker daemon lives here too. We'll first look at what it does, and later at how it does it.

First, the Docker CLI communicates with the server via the API. (API stands for 'Application Programming Interface'; it lets pieces of software communicate with each other.) The API then passes the request to the daemon, which calls the runtime to perform the operation on containers.

For example, a Docker CLI command like "docker run ubuntu" goes to the server, which asks the runtime to do the job. The runtime creates and starts the container, and hence the command is executed.
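To make the flow above concrete, here is a toy Python model of the path a command takes: CLI, then daemon, then runtime. It is only a sketch of the idea. The class and method names (`Runtime`, `Daemon`, `cli`, `start_container`) are made up for illustration and are not Docker's real code or API.

```python
# Toy model of the request path: CLI -> API -> daemon -> runtime -> container.
# Every class and method name here is hypothetical; none of this is Docker's real code.

class Runtime:
    """Stands in for the runtime layer that actually starts containers."""

    def __init__(self):
        self.running = []

    def start_container(self, image):
        container_name = f"{image}-container-{len(self.running) + 1}"
        self.running.append(container_name)
        return container_name


class Daemon:
    """Stands in for the daemon: receives API requests and delegates to the runtime."""

    def __init__(self, runtime):
        self.runtime = runtime

    def handle(self, request):
        if request["action"] == "run":
            return self.runtime.start_container(request["image"])
        raise ValueError(f"unsupported action: {request['action']}")


def cli(command, daemon):
    """Parse a command such as 'docker run ubuntu' and send it to the daemon."""
    program, action, image = command.split()
    if program != "docker":
        raise ValueError("not a docker command")
    return daemon.handle({"action": action, "image": image})


runtime = Runtime()
daemon = Daemon(runtime)
started = cli("docker run ubuntu", daemon)
print(started)  # ubuntu-container-1
```

The point to notice is that the CLI never touches the container itself. It only builds a request and hands it over, which is why the same daemon could be driven by any other client that speaks the same API.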

Orchestration

Consider a large number of containers running on the Docker server, all of them on one version, let's say V1. Now we want to update all of them to version V3. How do we do that?

Do we stop the entire application and then update, or do we keep updating the containers one by one by hand? Either way, that's very tedious and would require constant monitoring.

What if this could happen on its own, without our intervention?

Here, orchestration comes into the picture. (It does a lot more; that's just the tip of the iceberg, to be honest!)

But understanding the whole concept behind it would require a whole article of its own!

If you've heard of 'Kubernetes' before, this will sound familiar, as Kubernetes is itself an example of an orchestration tool!

Now, we'll understand the real meaning of a container and what an image is...
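The "update them one by one" idea from above can be sketched in a few lines of Python. This is a toy model under a big assumption: a container is represented by nothing more than its version string, and `rolling_update` is a made-up helper, not something an orchestrator actually exposes.

```python
# Toy rolling update: replace containers one at a time so the rest keep serving.
# Representing a container as a version string is an assumption made for illustration.

def rolling_update(containers, new_version):
    """Update each container in turn and record how many were serving at each step."""
    containers = list(containers)  # work on a copy, leave the input untouched
    serving_during_update = []
    for index in range(len(containers)):
        # The container at `index` is briefly offline while it is replaced...
        serving_during_update.append(len(containers) - 1)
        # ...and comes back on the new version.
        containers[index] = new_version
    return containers, serving_during_update


fleet = ["V1", "V1", "V1", "V1"]
updated, serving = rolling_update(fleet, "V3")
print(updated)  # ['V3', 'V3', 'V3', 'V3']
print(serving)  # [3, 3, 3, 3]
```

At every step three of the four containers are still serving, so the application never goes fully down. An orchestrator automates this loop, along with the monitoring that we would otherwise do by hand.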

Container Image

Consider the following scenario:

X lives in New Delhi, and Y resides in Pune. X is building a supercar by himself and, seeing X, Y is also interested in building the same. The problem is that Y doesn't know how to start, even though he has the resources to do so. X could just send him the steps to follow; it would be preposterous to send him the actual car!

In the same way, if we're running a live application on our end and our friend also wants to run the same, then we can just give him the source code and the steps for running it.

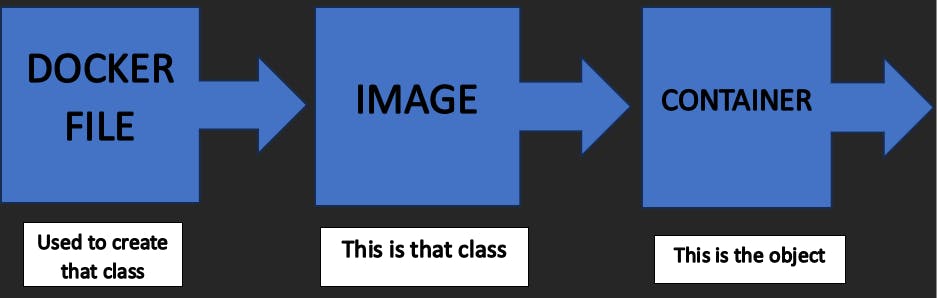

That set of instructions that leads to the running application is known as a Docker image. So, an image is a file that contains everything necessary for the application to run.

And if I now say that a container is a running instance of an image, would that be valid? YES!

Just like in the above scenario, X and Y both have the same instructions (the IMAGE), but what they make of those instructions and how they carry them out is up to them (the CONTAINERS). Each ends up with a different running instance of the same image.

If you have done OOP (Object-Oriented Programming), this will be clearer: an image is like a class, whereas a container is like an object.

Now we have a basic idea of what an image is. But in the pictorial representation of VMs and containers above, we saw an OS below the container. What does that mean?
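The class and object comparison can be written out directly. The following is a minimal Python sketch of the analogy only: `Image` and `Container` are illustrative classes invented here, and real images and containers are of course not Python objects.

```python
class Image:
    """Read-only template: the 'instructions' from the supercar analogy."""

    def __init__(self, name, instructions):
        self.name = name
        self.instructions = tuple(instructions)  # a tuple, so it cannot be modified

    def run(self, container_name):
        """Create a new running instance (a container) from this image."""
        return Container(container_name, self)


class Container:
    """A running instance of an image, with its own private state."""

    def __init__(self, name, image):
        self.name = name
        self.image = image
        self.state = []  # changes made while running stay in this container


supercar = Image("supercar", ["build chassis", "fit engine"])
x_car = supercar.run("x-car")
y_car = supercar.run("y-car")
x_car.state.append("painted red")

print(x_car.image is y_car.image)  # True: both come from the same image
print(y_car.state)                 # []: X's changes do not affect Y's container
```

Both containers share one image, yet what X does to his running instance never shows up in Y's. That is the same relationship a class has with its objects.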

Working Of An Image

Say we want to run Node.js in a container. If a container doesn't interact with the outside environment, how would Node.js still be able to run?

The image also contains the OS files and dependency files the application needs to run. These files are pulled from Docker Hub in the form of layers, and the pulling is kicked off from the CLI.

How does the CLI work?

When we ask it to run the hello-world image for the first time, it generally won't find that image locally, so it downloads it from Docker Hub. The pictorial representation will help you understand this further.
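The "look locally first, otherwise download" behaviour can be sketched as a small lookup function. This is a toy model: the `REGISTRY` dictionary stands in for Docker Hub, `get_image` is a made-up helper, and a real pull happens over the network rather than from a dictionary.

```python
# Toy model of "use the local image if present, otherwise pull it from the registry".
# The dictionaries are stand-ins; a real registry lookup happens over the network.

REGISTRY = {"hello-world": "hello-world image data", "ubuntu": "ubuntu image data"}


def get_image(name, local_images, registry=REGISTRY):
    """Return (image, source), where source is 'local' or 'pulled'."""
    if name in local_images:
        return local_images[name], "local"
    if name not in registry:
        raise LookupError(f"image not found: {name}")
    local_images[name] = registry[name]  # keep a local copy for next time
    return local_images[name], "pulled"


local = {}
_, first = get_image("hello-world", local)
_, second = get_image("hello-world", local)
print(first, second)  # pulled local
```

The first run has to pull the image, while the second one finds it on the local system and skips the download.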

The architecture of Docker Engine

If we tell Docker to run Ubuntu, that is the client's request. The daemon is called and, in turn, it calls containerd through an API built on gRPC that exposes CRUD operations (create, read, update, delete).

Now, gRPC is a framework for remote procedure calls (RPC), used to build APIs. It lets one program call a function in another program: a request comes in and the matching response goes back. (In layman's terms!)

The image is pulled and handed to containerd, and runc is told to create a container out of it. runc creates the container by talking to the OS kernel and pulling in the necessary resources.

Importance of Docker Daemon

Till now, everything seems to be done by containerd: the pulling, the calling of runc and facilitating it. So why is there an extra layer, the daemon? Is it kept there just because it calls containerd?

No. There are many runtimes other than runc and containerd, such as CRI-O and rkt, and the job of handling containers can be done by these other runtimes too.

The catch is that there is only one daemon, and only it manages the images, containers, and networking involved. (THAT'S THE MAIN REASON)

containerd was originally built by Docker, but it is now part of the CNCF (Cloud Native Computing Foundation).

'Daemon-less containers'

What if we want to do something with our daemon, like update it? Would this disrupt the running containers?

This is where the shim comes in. Once the container is created, runc is out of the picture (it is only used for creating and stopping containers). If the daemon goes down, the shim takes over the reins and looks after the container.

Above, while explaining the working of an image, it was stated that the image is pulled in layers. What are these layers?

Layers

Images are built in layers and every layer is an immutable file.

For example, when pulling Ubuntu from Docker Hub, Docker downloads only the layers that are not already on your local system. But still, why have multiple layers at all?

Consider the above example: the files Ubuntu needs to run, and the files MongoDB needs.

We see that both applications require File A to work. Also consider that we're already running Ubuntu, but now we want to work on MongoDB.

Layers spare us from downloading the whole file system again and again when the same file is required.

Only File E and File ZU would be pulled from Docker Hub, as File A already exists on our local system.
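Working out which layers to pull boils down to "everything the image needs, minus what we already have". Here is a minimal Python sketch of that check. The layer names follow the example above, except "File B", which is a made-up extra Ubuntu layer added so that the local store has more than one entry.

```python
# Layer names follow the example in the text; "File B" is a made-up extra Ubuntu layer.
ubuntu_layers = ["File A", "File B"]
mongodb_layers = ["File A", "File E", "File ZU"]


def layers_to_pull(image_layers, local_layers):
    """Return the layers of an image that are not already stored locally, in order."""
    local = set(local_layers)
    return [layer for layer in image_layers if layer not in local]


local_store = list(ubuntu_layers)  # Ubuntu has already been pulled
missing = layers_to_pull(mongodb_layers, local_store)
print(missing)  # ['File E', 'File ZU']
```

File A is skipped because it is already in the local store, so only the two missing layers need to be downloaded.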

How does the identification of layers occur?

This is done via hash values: every layer has a unique hash value calculated with SHA-256. While working with an image we get to see its ID, and the first 12 characters of the hash are shown as that image's ID.
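To get a feel for these hash values, here is a small Python sketch using the standard `hashlib` module. The byte strings are placeholder contents invented for this example; the sketch only shows the hashing and the 12-character shortening, not exactly what Docker feeds into the hash.

```python
import hashlib


def layer_digest(content: bytes) -> str:
    """Return the SHA-256 hex digest of some content (64 hex characters)."""
    return hashlib.sha256(content).hexdigest()


def short_id(digest: str) -> str:
    """Return the first 12 characters of a digest, the length used for short IDs."""
    return digest[:12]


# Placeholder contents, assumed purely for illustration.
digest_a = layer_digest(b"contents of File A")
digest_e = layer_digest(b"contents of File E")

print(len(digest_a))                                    # 64
print(len(short_id(digest_a)))                          # 12
print(digest_a == layer_digest(b"contents of File A"))  # True: same content, same hash
print(digest_a != digest_e)                             # True: different content, different hash
```

Because the same content always produces the same hash, Docker can tell whether a layer is already on the local system just by comparing hash values.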

OCI (Open Container Initiative)

This was introduced by the Linux Foundation. As stated earlier in the article, there are multiple runtimes, and this initiative sets the basic specifications that a runtime (runtime-spec) and an image (image-spec) must follow.

The need for a basic specification arose from the emergence of many container runtimes like containerd, runc, CRI-O, rkt etc.

We can even build our own runtime; we just have to follow the rules the specifications lay down.

Extra

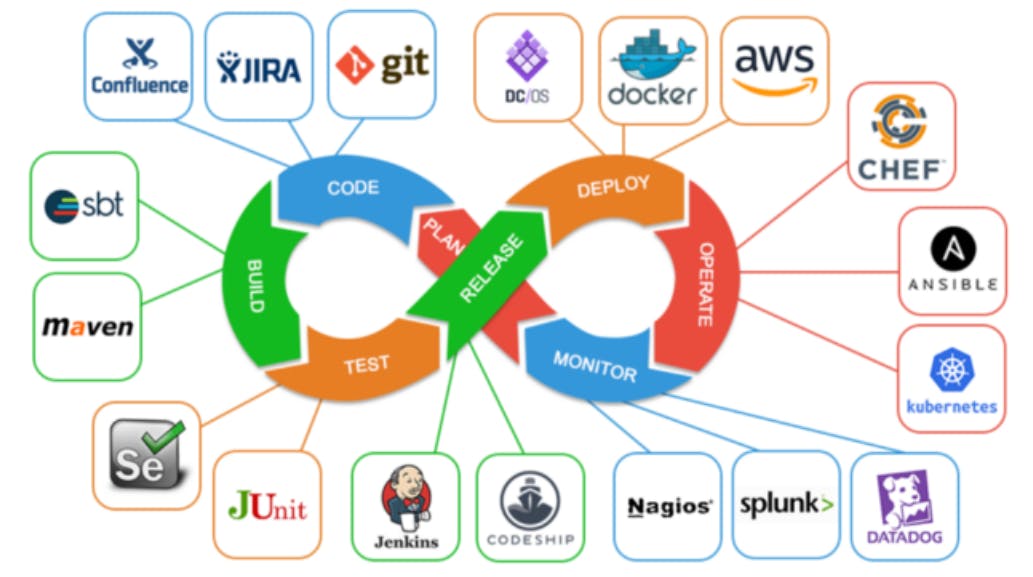

In the DevOps cycle, Docker fits mainly into the deployment phase of a product.

This is the basic theory, and it is enough to start working with Docker, but there is still much left to tell.

The technical part of how exactly to use Docker is still left: building images, creating containers, accessing a container locally, linking containers in two different bash shells, sharing containers etc.

All of that will be covered in the coming articles. Till then...

Keep Creating, Keep Exploring

---> Shrey Kothari